Deliver enterprise-grade agentic AI. Fast, compliant and in control.

One control layer for the entire AI lifecycle. Deploy in cloud, hybrid, or on-prem and accelerate development with built-in security and data controls.

secure

scalable

EU-based

Join 100+ AI teams already using Orq.ai to scale complex LLM apps

Enterprise-ready. Proven at scale.

Recognized as an Emerging Leader and Visionary in the 2025 Gartner® Innovation Guide for Generative AI Technologies.

Collaboration

Work as one team

Unite engineering, product, and data teams in one place. Shared context, role-based workflows, and human-in-the-loop feedback that drives continuous improvement.

Reliability

Stay in control

Ship with confidence using guardrails, fallbacks, canary releases, and rollbacks, plus live tracing and dashboards for predictable outcomes.

Speed

Build & ship 5x faster

Move from idea to production quickly with prompt and dataset versioning, A/B testing, and automated delivery pipelines, all without glue code.

Assurance

Enterprise assurance

RBAC, SSO, and audit trails, EU data residency options, and on-prem deployments, so security and compliance can say “yes.”

The platform

How it works

The platform

How it works

The platform

How it works

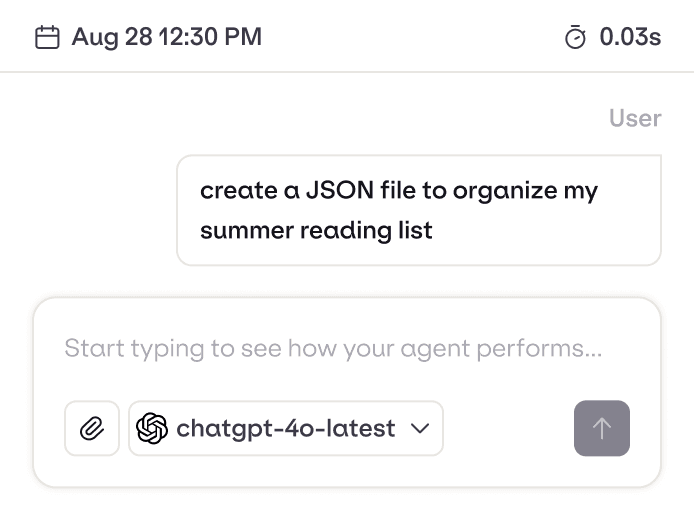

Agent framework

Tools & memory

Real-time orchestration

Agent Runtime

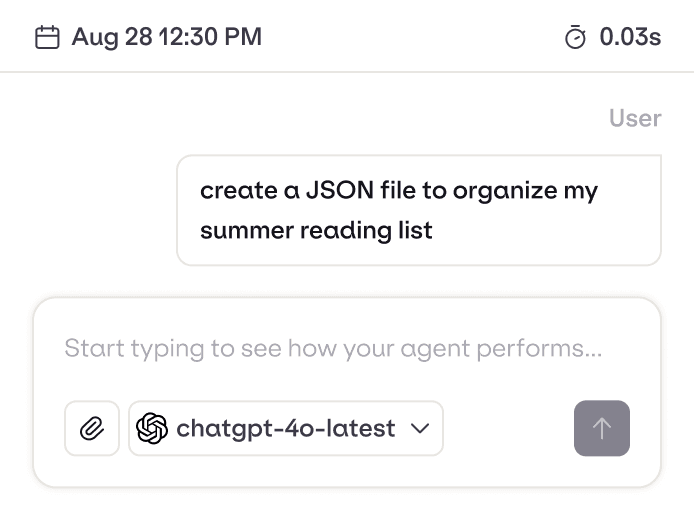

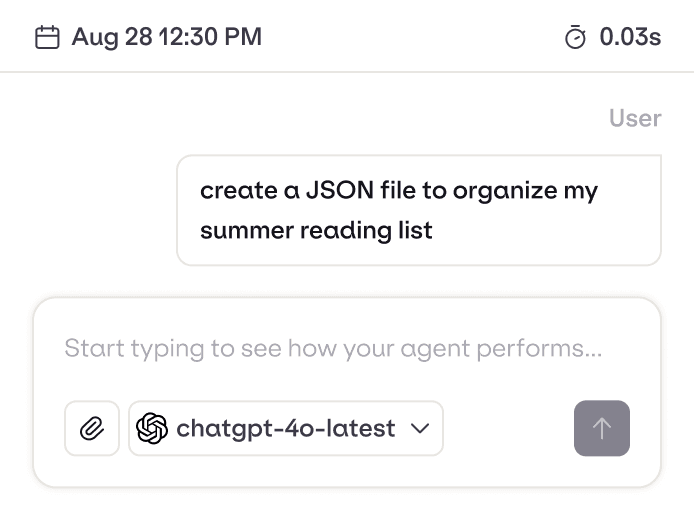

Manage your agent lifecycle: you develop and monitor, and our runtime handles everything else.

LLM evaluators

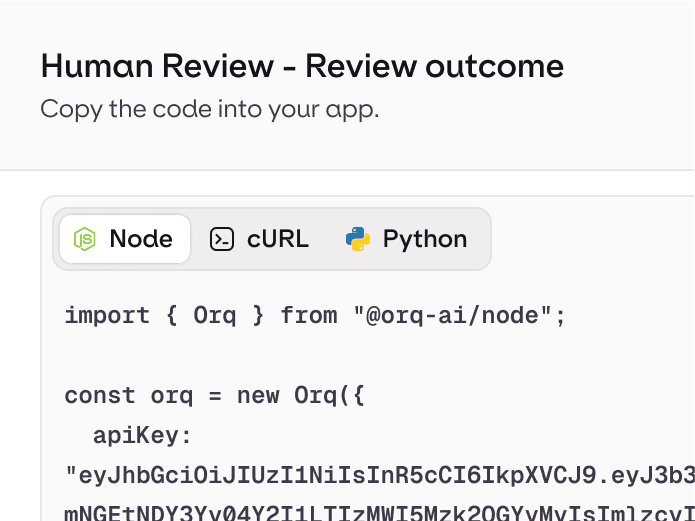

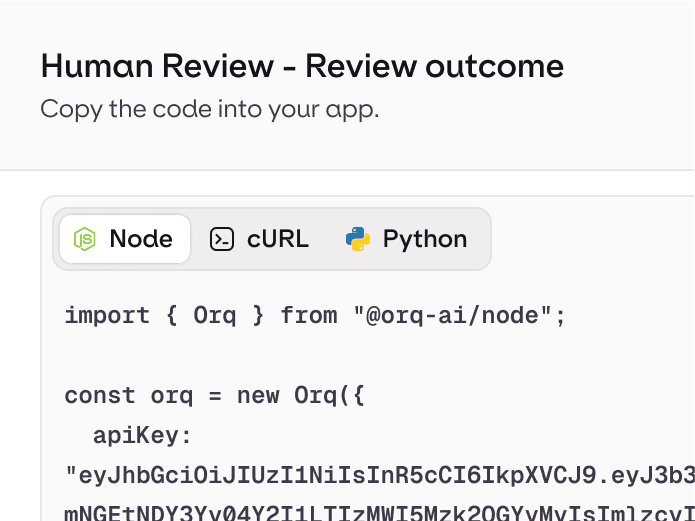

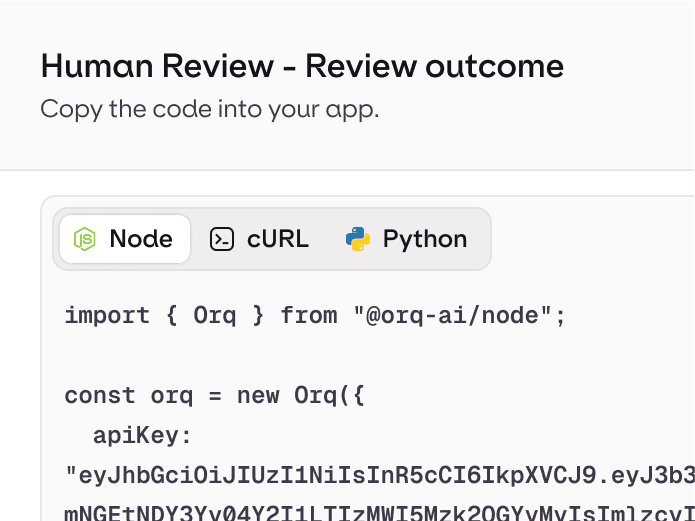

Human-in-the-Loop

Prompt scoring

Golden sets and A/B evals, with human review wired into delivery wherever risk is high.

Model routing

Failover & caching

Unified control

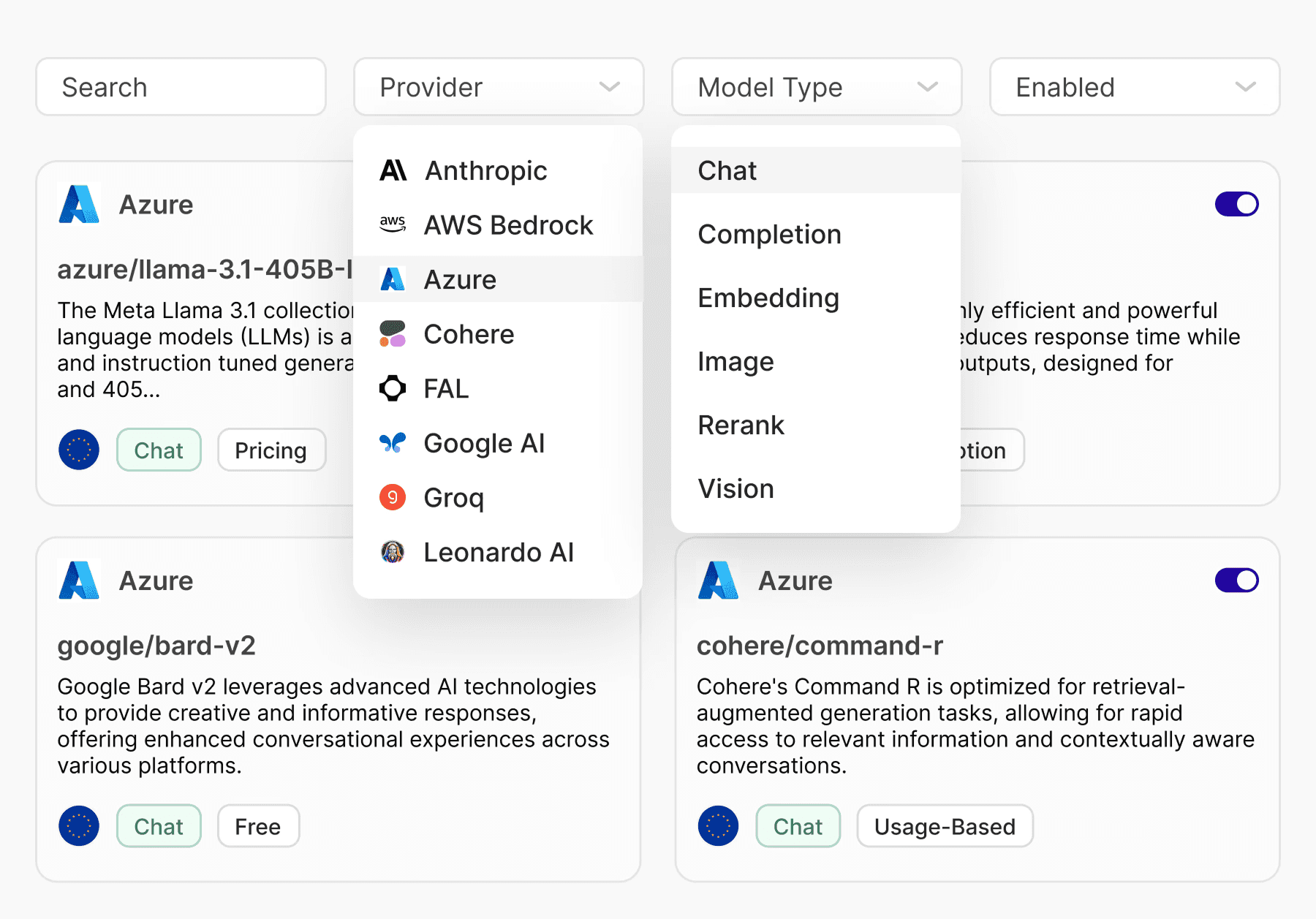

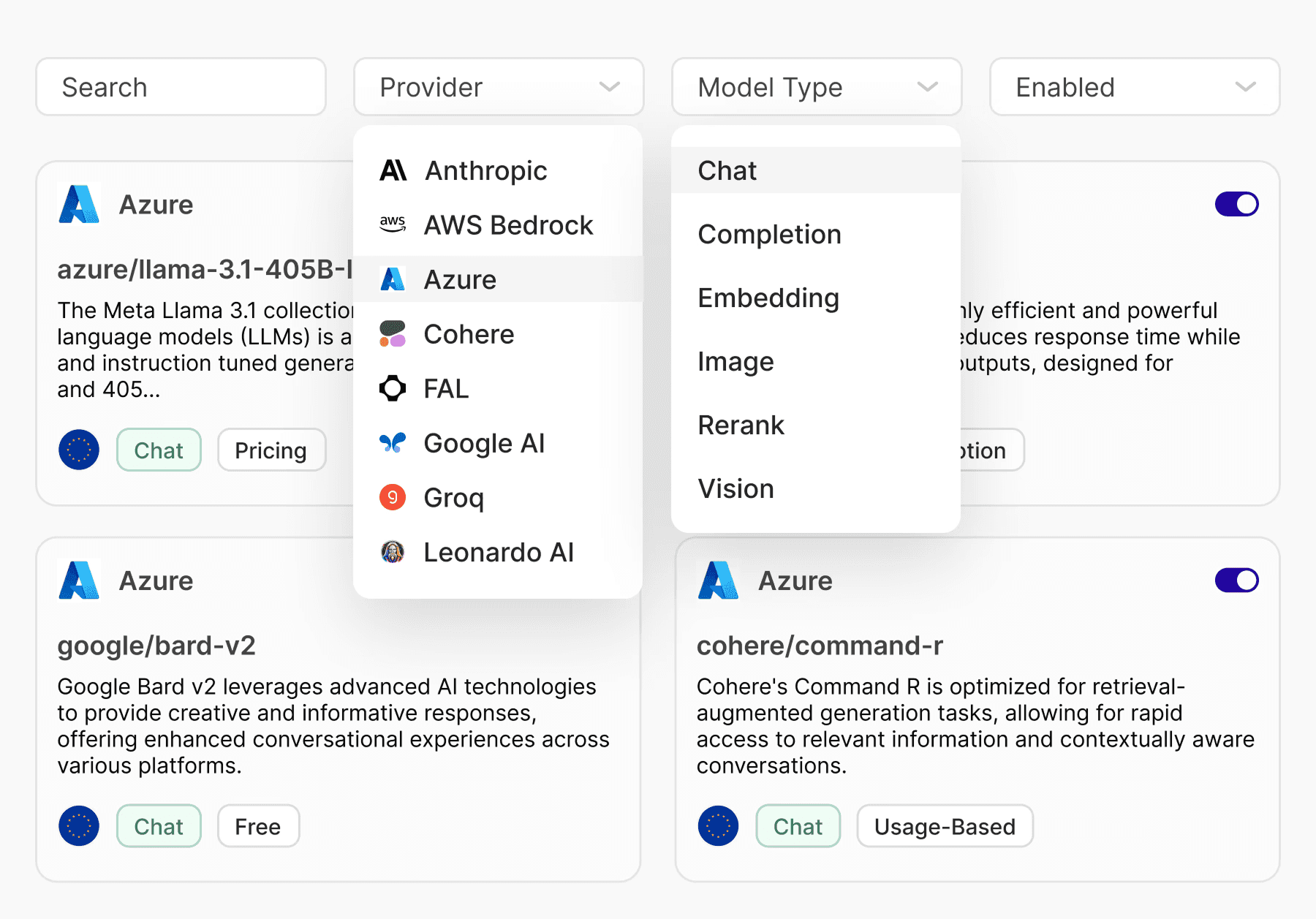

AI Gateway

Seamlessly route your AI traffic across 300+ models, with failovers, caching, and budget controls.

RAG & context

Data ingestion

Chunking & indexing

Knowledge Base

RAG-as-a-Service for your agents. Focus on your content; we handle all the pipelines.

Logs & traces

Real-time metrics

Alerts & dashboards

Monitoring & Observability

Trace every prompt, token, and tool. Dashboards and alerts catch cost, latency and quality issues early.

Work as one team. Stay in control.

Give Product, Engineering, and Data a shared workspace, backed by the controls Security and Legal expect.

Quality & risk standards

Golden sets, checks, and human review make quality measurable and repeatable across teams.

Shared workspace

One source of truth for prompts, datasets, and releases. Comments, reviews, and version history built in.

Access & ownership

Sign in with your company account. Roles define who can do what; every change is recorded.

Integrates with your stack

Works with major model providers and open-source models, plus popular vector stores and frameworks.

The voice of experts

Hear it from the industry leaders

“Orq has helped us save enormous amounts of time. Before, it would take us 6 weeks to build a custom-made AI solution for our clients. Now, it’s possible to build it in 2 weeks with Orq.”

Koen Verschuren

Founder

“As our platform started to grow, we needed a tool to help us manage our prompts and also improve our prompt engineering workflow.”

Mantas Urnieza

Co-founder

“We wanted to expand prompt engineering beyond just our developers by bringing in team members with specialized domain expertise. This collaboration enhances our innovation and ensures our AI solutions are top-notch.”

Thomas Goijarts

Founder

“We no longer have to build this whole other product to orchestrate LLMs – Orq.ai does that for us.”

Kyle Kinsey

Founding Engineer

Integrations at enterprise scale

Enterprise control tower for security, visibility, and team collaboration.