Build. Ship. Collaborate.

Make your AI development fast, secure, and collaborative, all from one platform that keeps everything under control.

Secure by design

European-based

Join 100+ AI teams already using Orq.ai to scale complex LLM apps

The platform

How it works

Runtime

Multi-agent

Tools

Memory Store

MCP

Agent2Agent

Real-time orchestration

Streaming

Guardrails

Agent Runtime

Manage your agent lifecycle. You develop and monitor; our runtime handles everything else.

Experimentation

Agent Simulation

LLM as a judge

Human evals

Agent Evals

RAG evals

Python evals

Datasets

Online evaluation

Golden sets and A/B evals, with human review wired into delivery where risk is high.

Budget control

Model routing

Multi-modality

Caching

Fallbacks & retries

Unified API

Identity tracking

BYOM

FinOps

Key management

AI Gateway

Seamlessly route your AI across 300+ models. Apply failovers, caching and budget controls.

RAG

Agentic RAG

Data ingestion

File processing

Chunking

Embedding

Retrieval

Reranking

RAG evals

Experimentation

Knowledge Base

RAG-as-a-Service for your agents. Focus on your content; we handle all the pipelines.

Traces

Threads

Real-time dashboards

Alerts

Automations

AI Insights

Third-party integrations

OpenTelemetry

Annotations

Feedback

Monitoring & Observability

Trace every prompt, token, and tool. Dashboards and alerts catch cost, latency and quality issues early.

The benefits

See immediate business results

“We no longer have to build this whole other product to orchestrate LLMs – Orq.ai does that for us.”

Kyle Kinsey

Founding Engineer

“Orq.ai opened prompt engineering to our non-technical teammates, key to scaling our AI-native platform.”

Mantas Urnieza

Co-founder

“With Orq.ai we cut our custom AI build time from 6 weeks to 2.”

Koen Verschuren

Founder

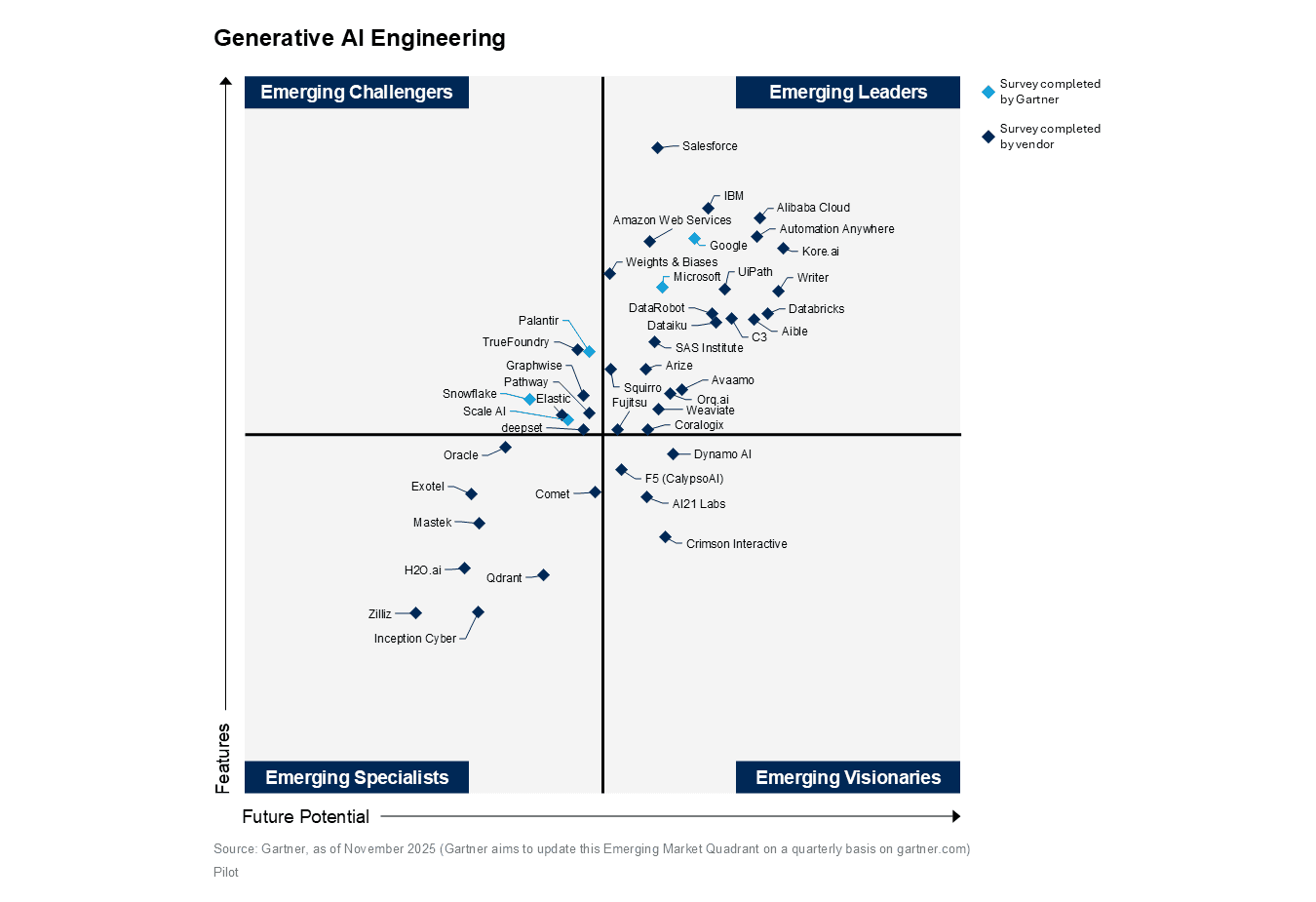

THIRD-PARTY RESEARCH

Gartner® recognition

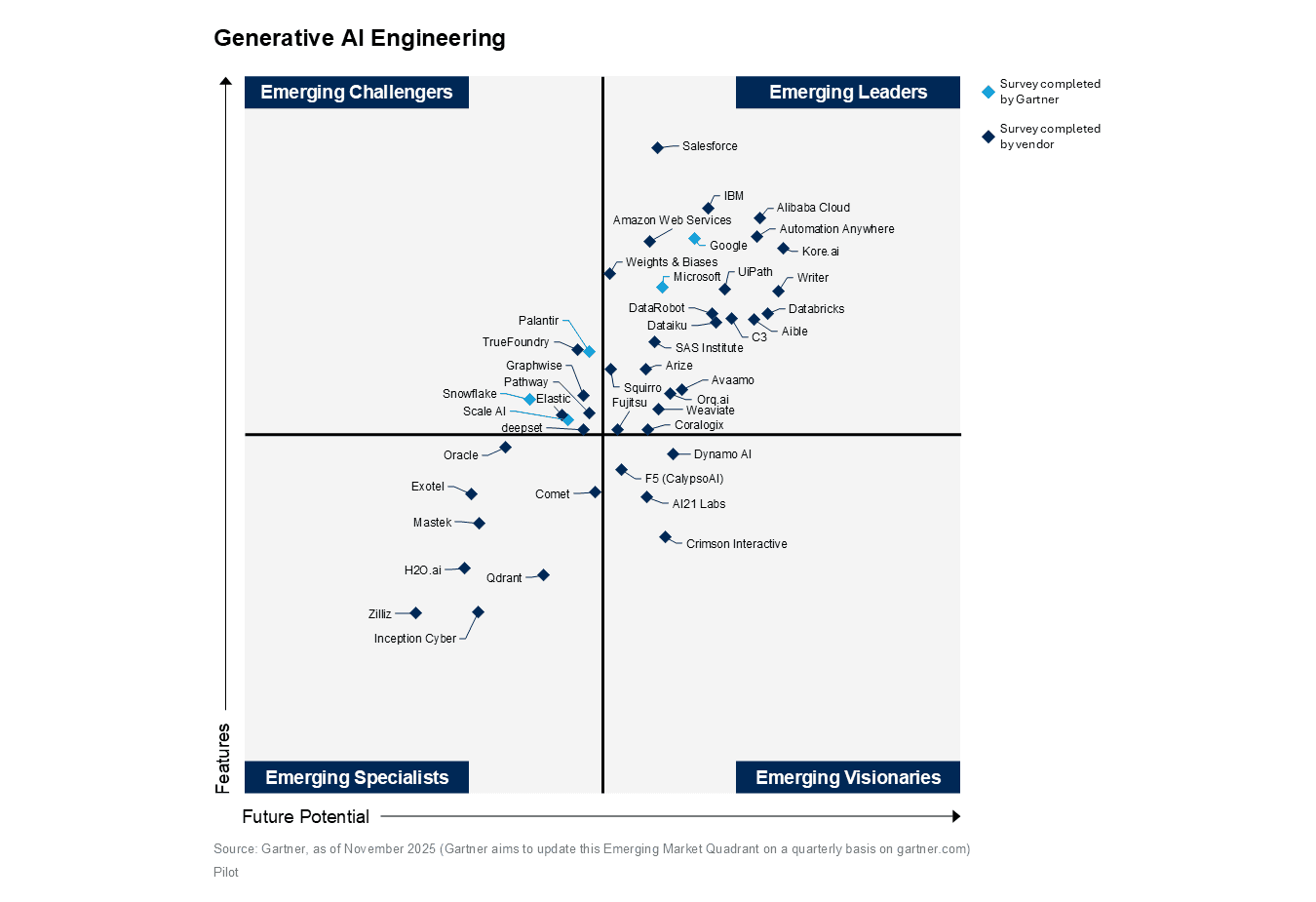

THIRD-PARTY RESEARCH

Gartner® recognition

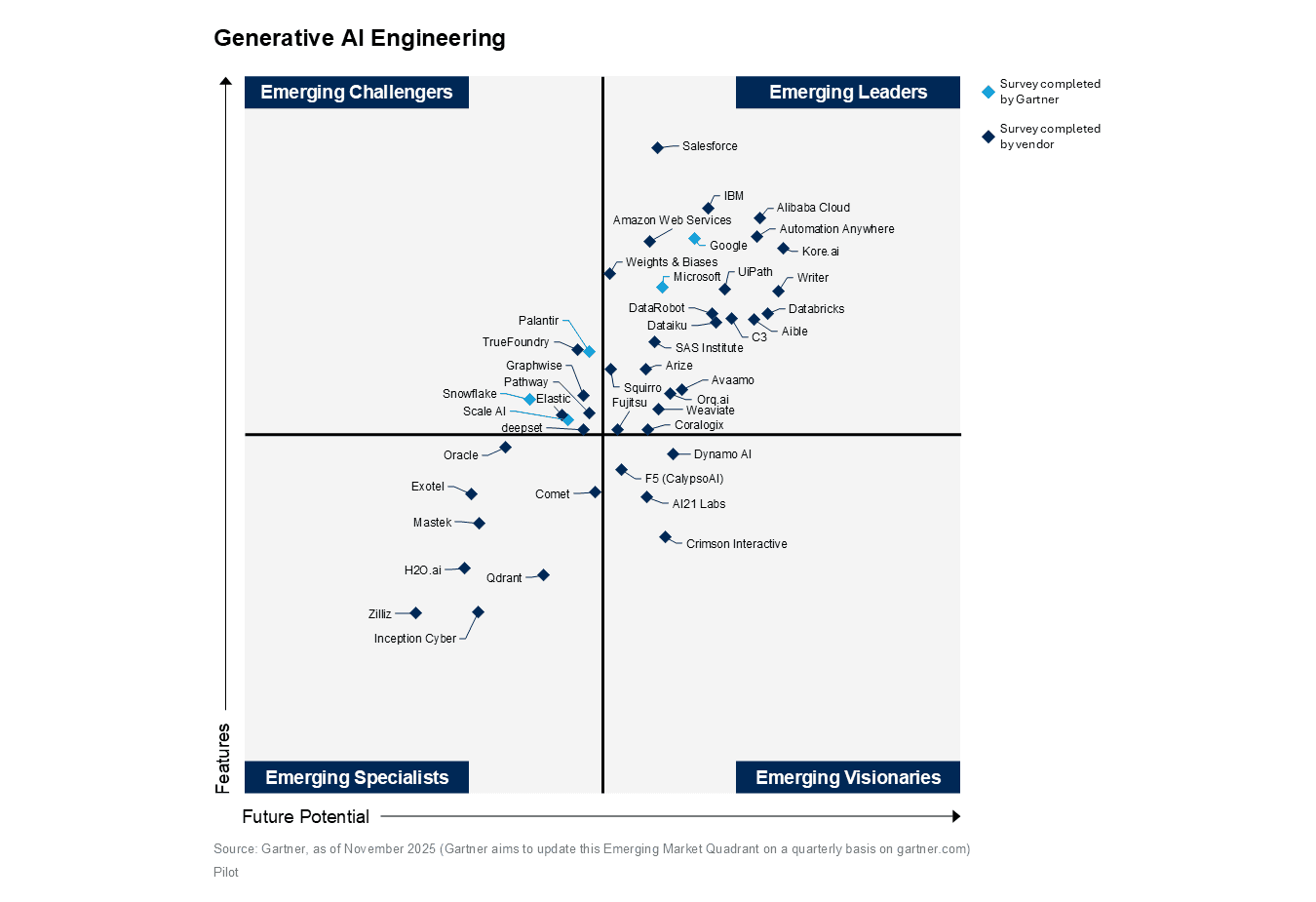

THIRD-PARTY RESEARCH

Gartner® recognition

Analyst Coverage

Included in the Gartner® Emerging Leaders Quadrant for Generative AI Engineering

Orq.ai has been included in the Gartner® Emerging Leaders Quadrant for Generative AI Engineering (2025), recognizing our vision for building and operating production-grade GenAI systems. We are building infrastructure for teams that need reliability and control as GenAI moves from experimentation into production.

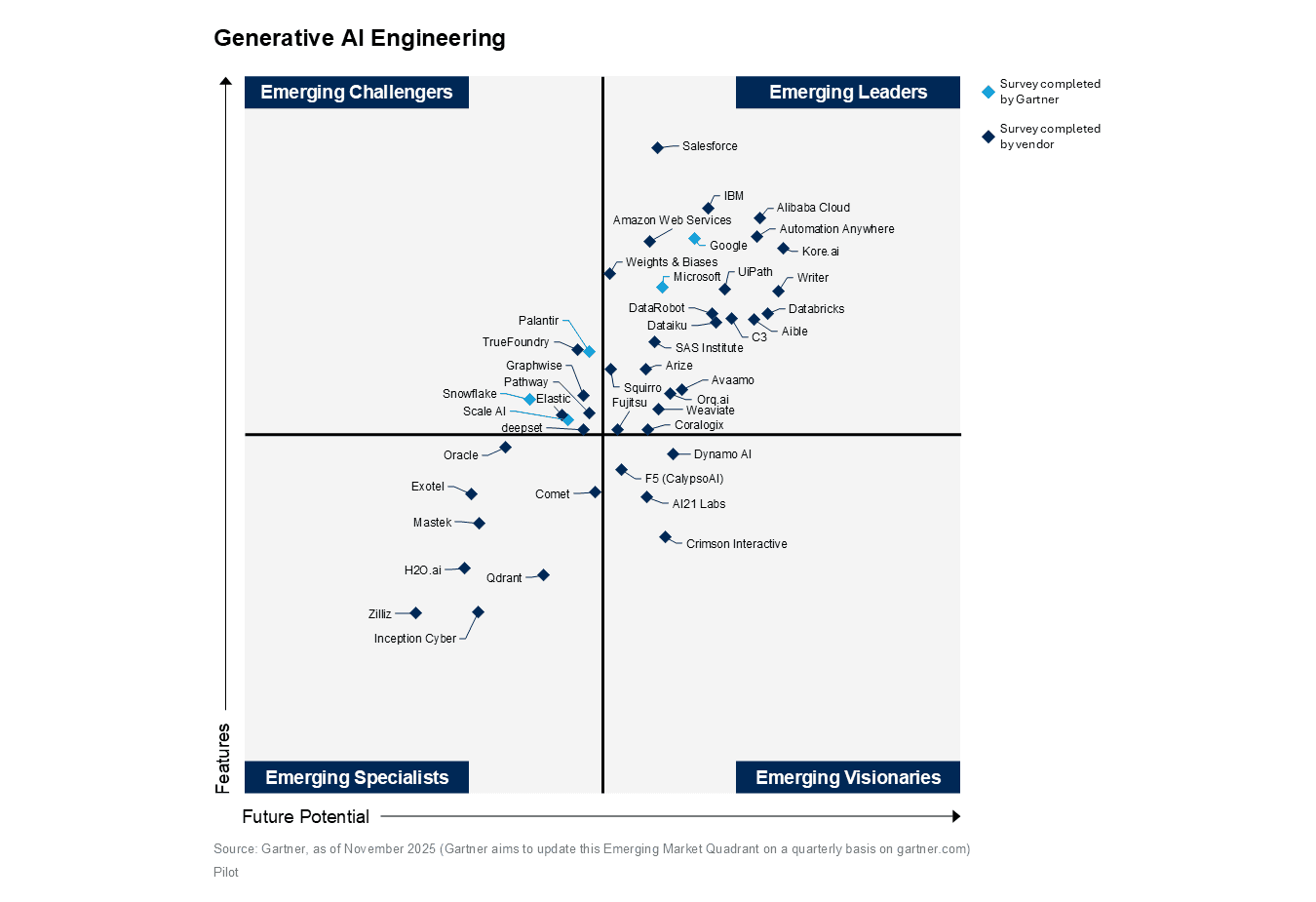

Emerging Leaders

GenAI Engineering

MADE FOR COLLABORATION

Why teams switch

Speed

Build & ship 5x faster

Move 5x faster from concept to production with unified workflows and fast evals. Ship quickly without fragile glue code.

Reliability

Stay in control

Keep quality and costs predictable with guardrails, canaries, and rollbacks. Dashboards and alerts catch issues early.

Collaboration

Work as one team

Use one shared workspace with roles, reviews, and human-in-the-loop (HITL) built in. One source of truth for prompts, datasets, and releases.

Deploy anywhere. Integrate with everything.

Works with leading LLMs and providers; bring your own models. Deploy in the cloud, hybrid, or on-prem with enterprise security and EU compliance.

Data Residency

Keep data in the EU or the region you choose. Set how long we keep it and automatically mask sensitive fields.

Enterprise

Sign in with your company account. Control who can do what. Every change is logged, and we provide standard compliance paperwork.

Deployment methods

Our cloud, your cloud, or your servers. Private connections supported. Roll out safely and roll back fast.

The voice of customers

Hear it from industry leaders

"Before Orq.ai, our team relied on Excel sheets and custom scripts, a lot of manual work that slowed us down. Now we can ship new features much faster, especially for complex use cases like voicebots. The real value is in the speed and ease of testing; it multiplies our output and gives us room to be creative."

Timo Verbeek

GenAI Engineer

“We no longer have to build this whole other product to orchestrate LLMs – Orq.ai does that for us.”

Kyle Kinsey

Founding Engineer

“We wanted to expand prompt engineering beyond just our developers by bringing in team members with specialized domain expertise. This collaboration enhances our innovation and ensures our AI solutions are top-notch.”

Thomas Goijarts

Founder

“As our platform started to grow, we needed a tool to help us manage our prompts and also improve our prompt engineering workflow.”

Mantas Urnieza

Co-founder

“Orq has helped us save enormous amounts of time. Before, it would take us 6 weeks to build a custom-made AI solution for our clients. Now, it’s possible to build it in 2 weeks with Orq.”

Koen Verschuren

Founder