Top 5 Humanloop Alternatives in 2025

Looking for Humanloop alternatives? Discover top platforms for building, evaluating, and scaling LLM-based applications.

July 22, 2025

Reginald Martyr

Marketing Manager

Key Takeaways

As teams scale their GenAI applications, finding the right LLMOps platform is more important than ever.

Several strong alternatives offer better support for observability, orchestration, and cross-functional collaboration.

Choosing the right platform depends on your team’s goals, whether it’s prompt optimization, agent orchestration, or improving LLM system reliability.

Bring LLM-powered apps from prototype to production

Discover a collaborative platform where teams work side-by-side to deliver LLM apps safely.

Whether you're building AI-powered agents, experimenting with LLM apps, or scaling your AI infrastructure, operational tooling is no longer optional: it’s foundational. As teams move from prototypes to production, the need for robust systems to monitor, evaluate, and iterate on AI workflows has never been greater.

Humanloop has emerged in recent years as a go-to solution for managing LLM workflows, prompt tuning, and evaluation. But with Humanloop officially sunsetting its platform in September 2025, many teams are now evaluating alternatives that can meet their evolving needs in AI agent and application development.

In this blog post, we explore five leading Humanloop alternatives to help your team find the right tool for building, scaling, and evaluating AI systems.

Top 5 Humanloop Alternatives

1. Orq.ai

Evaluators & Guardrails Configuration in Orq.ai

Orq.ai is a Generative AI Collaboration Platform where software teams operate agentic systems at scale. Launched in early 2024, Orq.ai helps AI teams deliver LLM-based applications by filling the gaps traditional DevOps tools weren’t built to handle, namely the need for collaboration between engineers, product teams, and business experts.

In a nutshell, Orq.ai enables teams to experiment with GenAI use cases, deploy them to production, and monitor performance, all in one platform. Whether you're building AI copilots, multi-step workflows, or domain-specific agents, Orq.ai enables technical and non-technical contributors to work together seamlessly across the full AI application development lifecycle.

Here's an overview of our platform's core capabilities:

Prompt Engineering & Experimentation: Compare LLM and prompt configurations at scale using our library of evaluators. The platform also supports testing RAG pipelines and running offline evaluations to fine-tune performance before deploying to production.

Serverless Orchestration: With Orq.ai, you can run agentic workflows serverlessly without deploying your own backend infrastructure. The platform supports version-controlled templates, logic branches, and parameterized flows, while also offering hosted runtimes with built-in routing, error handling, and fallback logic.

Observability & Monitoring: Orq.ai delivers full tracing, session replays, and anomaly detection so teams can gain real visibility into how their systems perform. We also allow you to track usage, cost, latency, and model behavior over time while providing built-in regression testing and debugging tools to help teams troubleshoot issues efficiently.

AI Gateway: You can connect to multiple LLM providers through a single, unified API, making it easy to centralize your model access. Orq.ai also supports provider routing, caching, and cost management, and includes features for data residency, audit logging, and role-based access control.

RAG-as-a-Service: Orq.ai offers RAG-as-a-Service pipelines out of the box. Teams can upload documents with minimal setup, use built-in embedding models, and manage their knowledge bases directly through the API.
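
To make the routing and fallback idea behind a gateway more concrete, here is a minimal Python sketch of the general pattern: try providers in order and return the first successful response. This is not Orq.ai's API. The `complete_with_fallback` function, the `AllProvidersFailed` error, and the stand-in providers are hypothetical names invented for illustration, and no real model is called.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical provider type: takes a prompt, returns a completion or raises.
Provider = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the chain has failed."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, Provider]]
) -> Tuple[str, str]:
    """Try each (name, provider) in order; return (name, completion) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a production gateway would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stand-in providers for illustration only.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def stable_backup(prompt: str) -> str:
    return f"echo: {prompt}"


used, answer = complete_with_fallback(
    "hello", [("primary", flaky_primary), ("backup", stable_backup)]
)
print(used, answer)
```

In this sketch the caller only sees which provider answered. A real gateway would typically add retries, timeouts, caching, and cost tracking around the same loop.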

Why Choose Orq.ai?

Orq.ai is a solid choice for teams looking to scale AI agent development beyond prototypes. Our end-to-end platform supports everything from experimentation to production monitoring, all while promoting cross-functional collaboration.

Book a demo with our team or create a free account to explore our platform today.

2. LangSmith

Credits: LangSmith

LangSmith is an observability and evaluation platform built by the creators of LangChain, designed to help developers debug, test, and monitor LLM-powered applications. Tailored specifically for LangChain-based projects, LangSmith makes it easier for teams to track and refine their agents, chains, and prompts throughout the development lifecycle. For teams already committed to the LangChain ecosystem, LangSmith is a natural fit that enhances transparency and control over LLM behavior.

Here's a quick look at LangSmith's main platform capabilities:

Integrated Tracing for LangChain Workflows: LangSmith provides detailed tracing capabilities for LangChain agents and chains, making it easy to visualize and debug multi-step workflows. Developers can inspect each step in the execution pipeline, including inputs, outputs, and intermediate tool calls.

Prompt Versioning and Testing: LangSmith enables prompt versioning and comparison, allowing teams to track changes and evaluate how different prompt iterations affect model outputs. This feature helps with systematic prompt tuning and model behavior analysis.

Evaluation and Feedback Tools: The platform supports structured and unstructured evaluations, including test dataset runs and human feedback collection. This makes it easier to assess performance over time and detect regressions as prompts or chains evolve.

Production Monitoring and Deployment Readiness: LangSmith supports production-grade monitoring with built-in visibility into application health, including response times, performance metrics, and user interactions. This makes it easier for teams to confidently deploy and operate LLM applications at scale.

Metadata Tagging and Custom Metrics: LangSmith allows developers to tag runs with metadata and define custom metrics, giving teams a flexible way to organize and analyze their data across experiments.

API Access and Hosted Platform: LangSmith offers a hosted platform with programmatic access to runs, traces, and evaluation data via API. This enables teams to integrate LangSmith insights into their broader development and monitoring workflows.
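
As a rough illustration of how running a test dataset can surface regressions between prompt versions, the sketch below scores two versions against a small dataset and flags a drop. It does not use the LangSmith SDK. The dataset, the `contains_expected` evaluator, the tolerance value, and the canned outputs standing in for model responses are all assumptions made for this example.

```python
from statistics import mean

# Hypothetical test dataset: (input, expected keyword) pairs.
DATASET = [
    ("What is the capital of France?", "paris"),
    ("What is 2 + 2?", "4"),
    ("Name the largest planet.", "jupiter"),
]

# Canned outputs standing in for two prompt versions; no model is called.
V1_OUTPUTS = {
    "What is the capital of France?": "The capital is Paris.",
    "What is 2 + 2?": "2 + 2 equals 4.",
    "Name the largest planet.": "Jupiter is the largest planet.",
}
V2_OUTPUTS = dict(V1_OUTPUTS, **{"Name the largest planet.": "Saturn is the largest planet."})


def contains_expected(output: str, expected: str) -> float:
    """Code-based evaluator: 1.0 if the expected keyword appears in the output, else 0.0."""
    return 1.0 if expected.lower() in output.lower() else 0.0


def score_version(outputs: dict, dataset: list) -> float:
    """Average evaluator score for one prompt version over the whole dataset."""
    return mean([contains_expected(outputs[q], exp) for q, exp in dataset])


def is_regression(baseline: float, candidate: float, tolerance: float = 0.05) -> bool:
    """Flag the candidate if it scores lower than the baseline by more than the tolerance."""
    return candidate < baseline - tolerance


baseline_score = score_version(V1_OUTPUTS, DATASET)
candidate_score = score_version(V2_OUTPUTS, DATASET)
print(baseline_score, candidate_score, is_regression(baseline_score, candidate_score))
```

Keyword matching is the simplest possible evaluator. The same loop works with an LLM-based or human score, as long as each evaluator returns a number that can be averaged.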

Why Choose LangSmith?

LangSmith is a logical choice for teams deeply invested in the LangChain framework and looking for robust tools to monitor and evaluate LLM workflows. Its native integration with LangChain makes it easy to instrument chains and agents without additional overhead. However, for teams building outside the LangChain ecosystem or seeking a broader platform for workflow orchestration, deployment, or RAG capabilities, LangSmith may feel limited in scope. It excels at observability and evaluation but is not intended as a full-stack solution for AI agent development or deployment at scale.

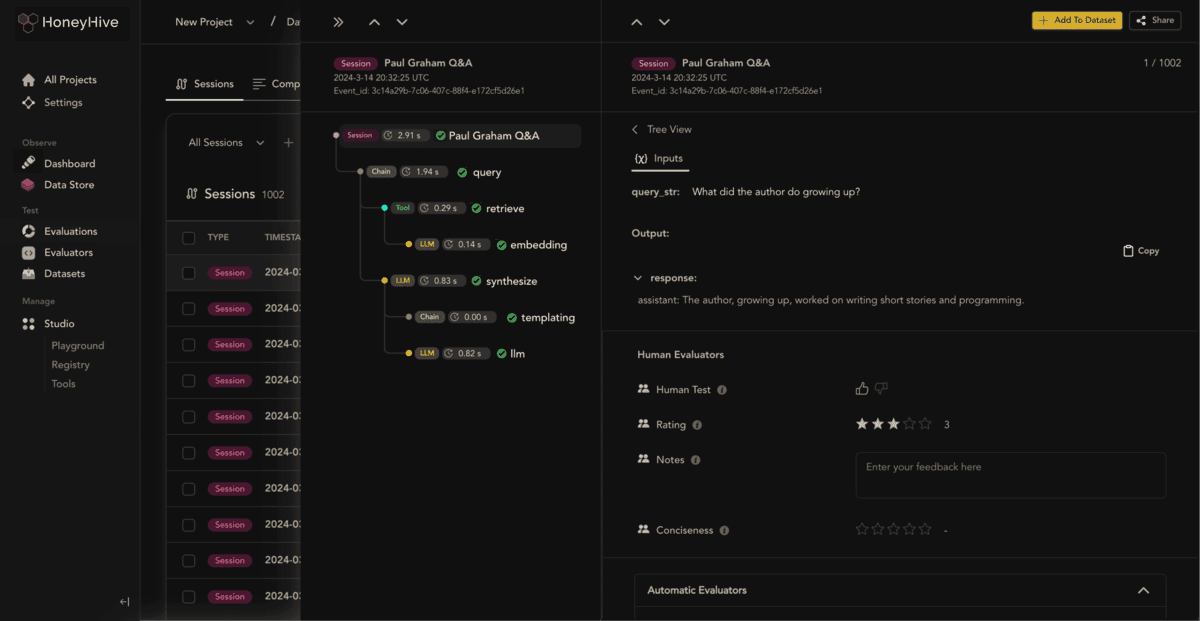

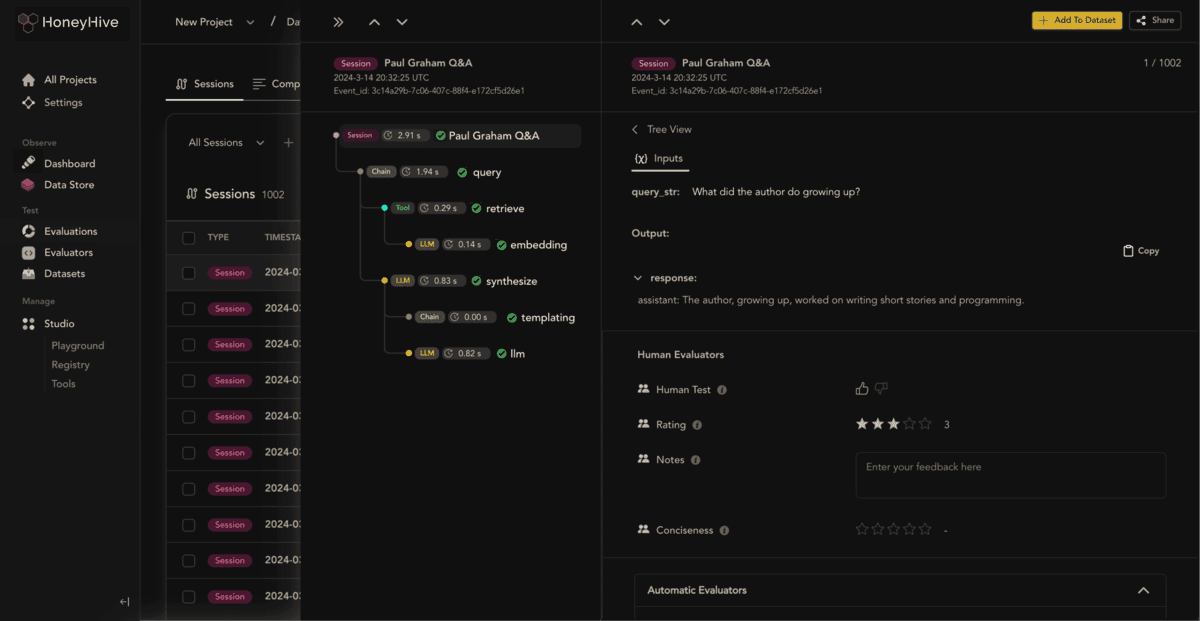

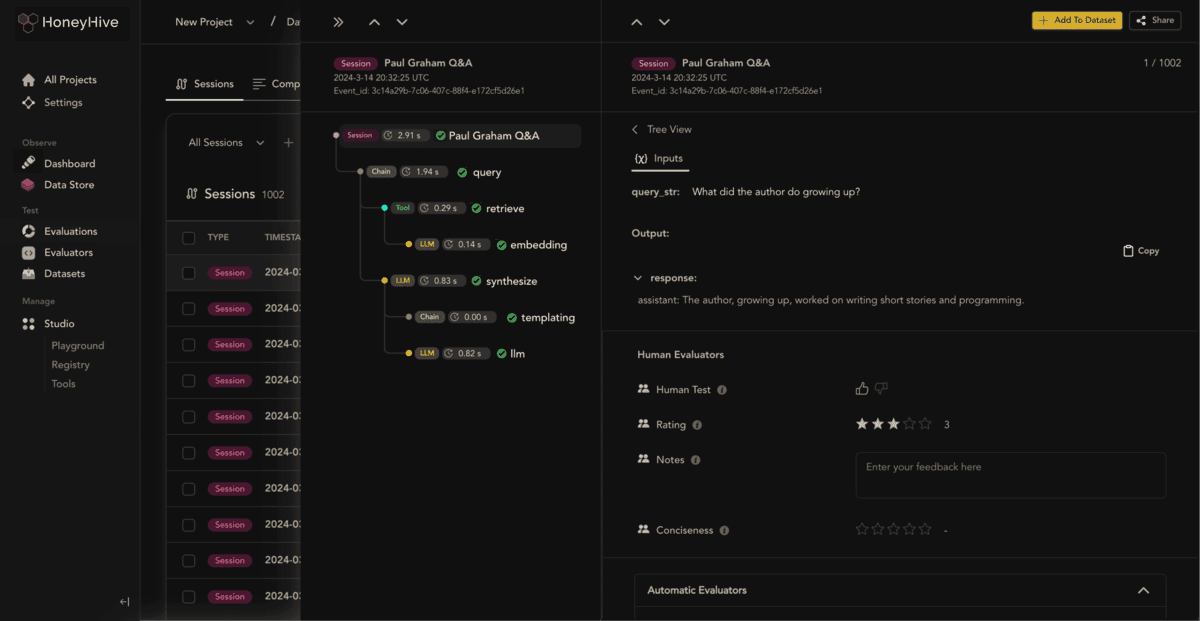

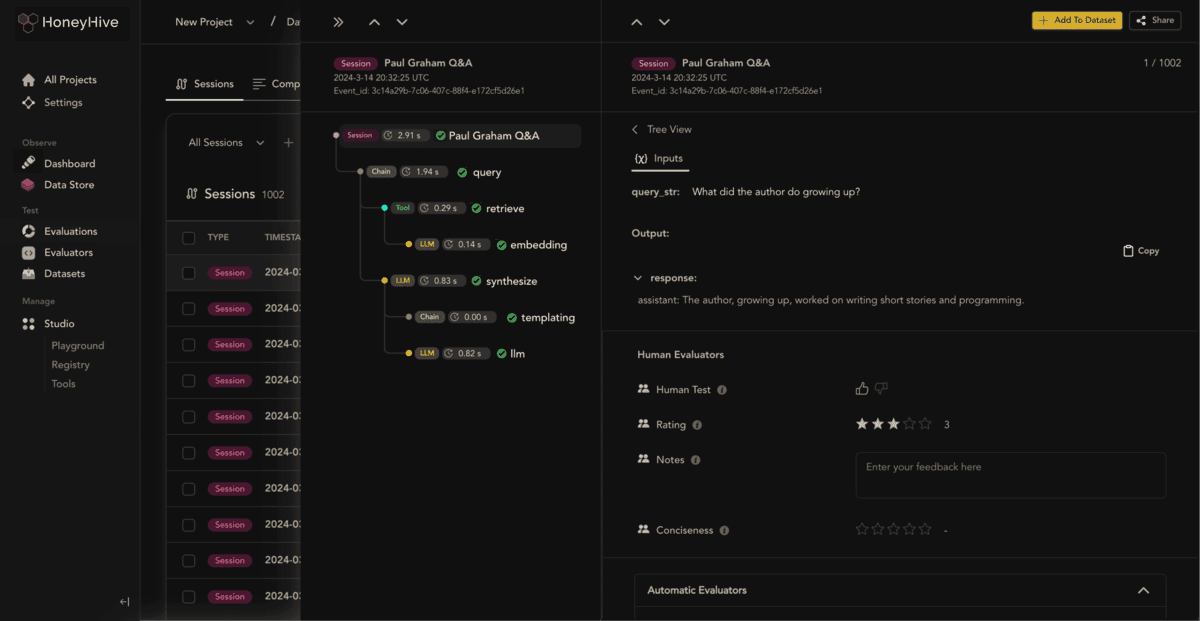

3. HoneyHive

Credits: HoneyHive

HoneyHive is an observability and evaluation platform built to support cross-functional teams building and scaling AI agents and LLM applications. It provides a unified workflow for constructing, testing, debugging, and monitoring Generative AI systems, making it a strong contender in the emerging LLMOps space.

Key features include:

Experimentation and Benchmarking: HoneyHive enables you to run structured experiments with custom benchmarks, automating evaluations across prompts, models, and entire agent pipelines. You can track regressions, assign human scores, curate golden datasets, and integrate these checks into CI workflows.

Distributed Tracing & Session Replay: With its OpenTelemetry-based tracer, HoneyHive provides in-depth visibility into application execution. You can inspect distributed traces, custom spans, and session replays to pinpoint failures and performance bottlenecks in multi-step LLM pipelines.

Monitoring, Alerting & Analytics: HoneyHive supports asynchronous logging, custom metrics, and live alerts for production environments. Teams can build custom dashboards, segment data using metadata, and monitor RAG and agent pipelines in real time to catch anomalies and spot user feedback trends.

Built-In Evaluators & Custom Metrics: The platform provides a library of evaluators, including code-based, LLM-based, and human scoring tools. You can also define custom metrics and embed them directly into tracing spans or evaluation workflows.
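
For readers new to tracing, the following Python sketch shows the basic idea behind nested spans in a multi-step LLM pipeline: each step records its name, its parent, custom attributes, and its duration. It is deliberately not the OpenTelemetry or HoneyHive API. The `Tracer` and `Span` classes, the span names, and the attribute values are hypothetical and kept in memory for illustration.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    span_id: str
    parent_id: Optional[str]
    attributes: dict = field(default_factory=dict)
    duration_ms: float = 0.0


class Tracer:
    """Minimal in-memory tracer; finished spans are appended to `spans`."""

    def __init__(self) -> None:
        self.spans = []
        self._stack = []

    @contextmanager
    def span(self, name: str, **attributes):
        # The innermost open span (if any) becomes the parent of the new one.
        parent_id = self._stack[-1].span_id if self._stack else None
        current = Span(name, uuid.uuid4().hex, parent_id, dict(attributes))
        self._stack.append(current)
        start = time.perf_counter()
        try:
            yield current
        finally:
            current.duration_ms = (time.perf_counter() - start) * 1000
            self._stack.pop()
            self.spans.append(current)


tracer = Tracer()
with tracer.span("handle_request", user_segment="beta"):
    with tracer.span("retrieve_documents", top_k=3):
        pass  # a retrieval step would run here
    with tracer.span("llm_call", model="example-model") as llm_span:
        llm_span.attributes["output_tokens"] = 42  # custom metric attached to the span

for s in tracer.spans:
    print(s.name, s.parent_id, s.attributes, round(s.duration_ms, 3))
```

Spans are stored in the order they finish, so child spans appear before their parent. A production tracer would export them to a backend instead of keeping them in a list.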

Why Choose HoneyHive?

HoneyHive is ideal for teams that require a robust, developer-grade LLMOps stack. It goes far beyond simple prompt testing, offering enterprise-grade observability, automated testing, live monitoring, and feedback annotation. That said, while the platform offers powerful tooling, teams might still need to pair it with orchestration or RAG-specific platforms to handle end-to-end AI agent development workflows.

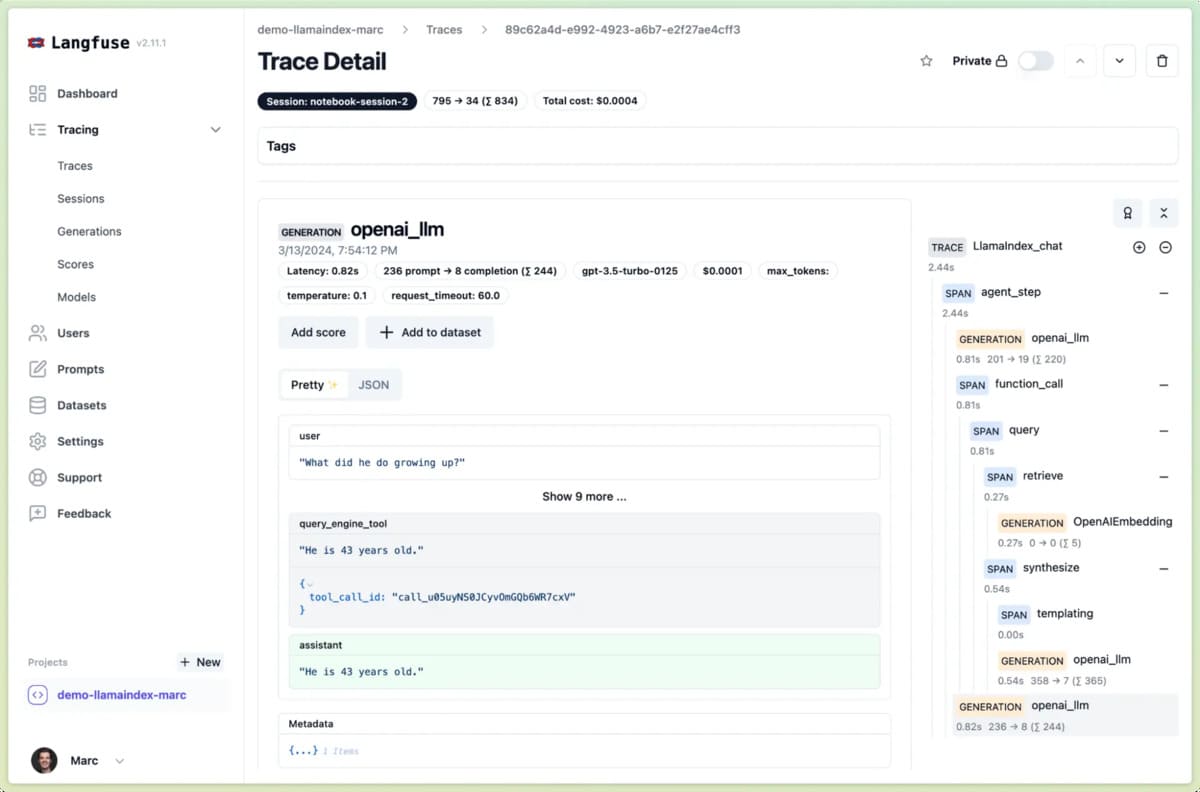

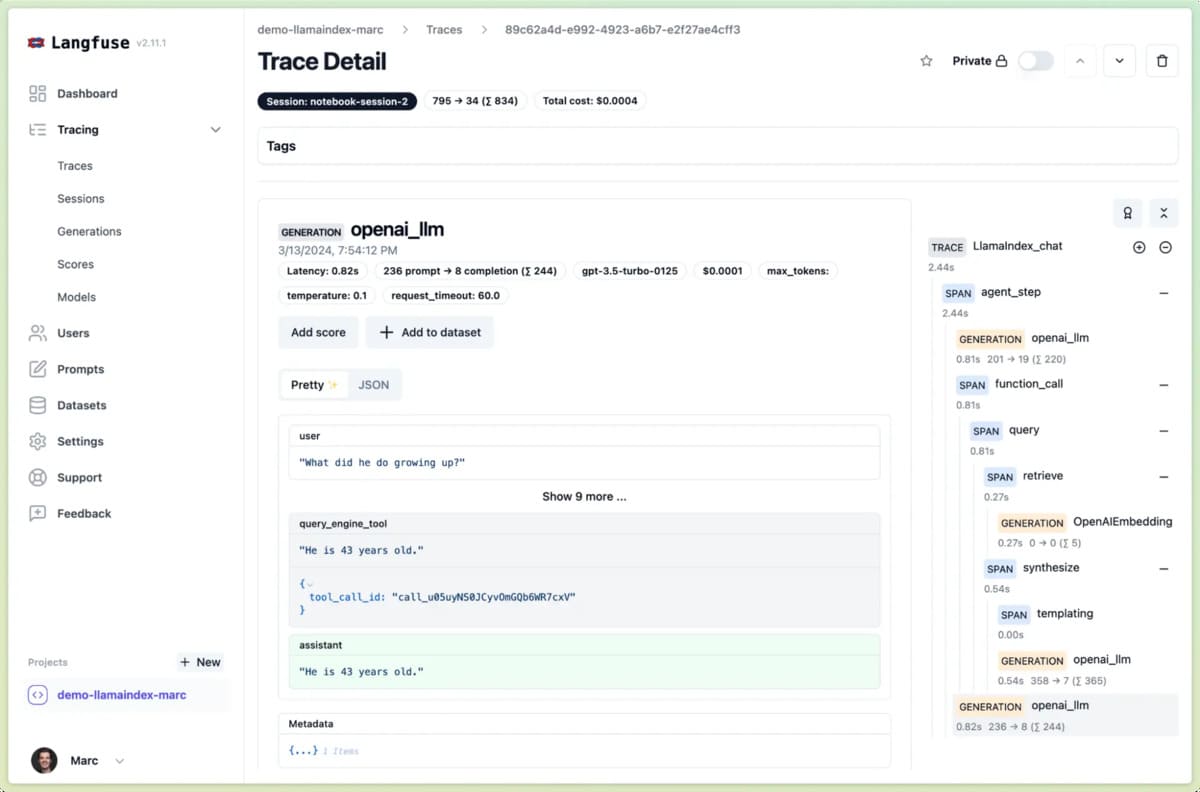

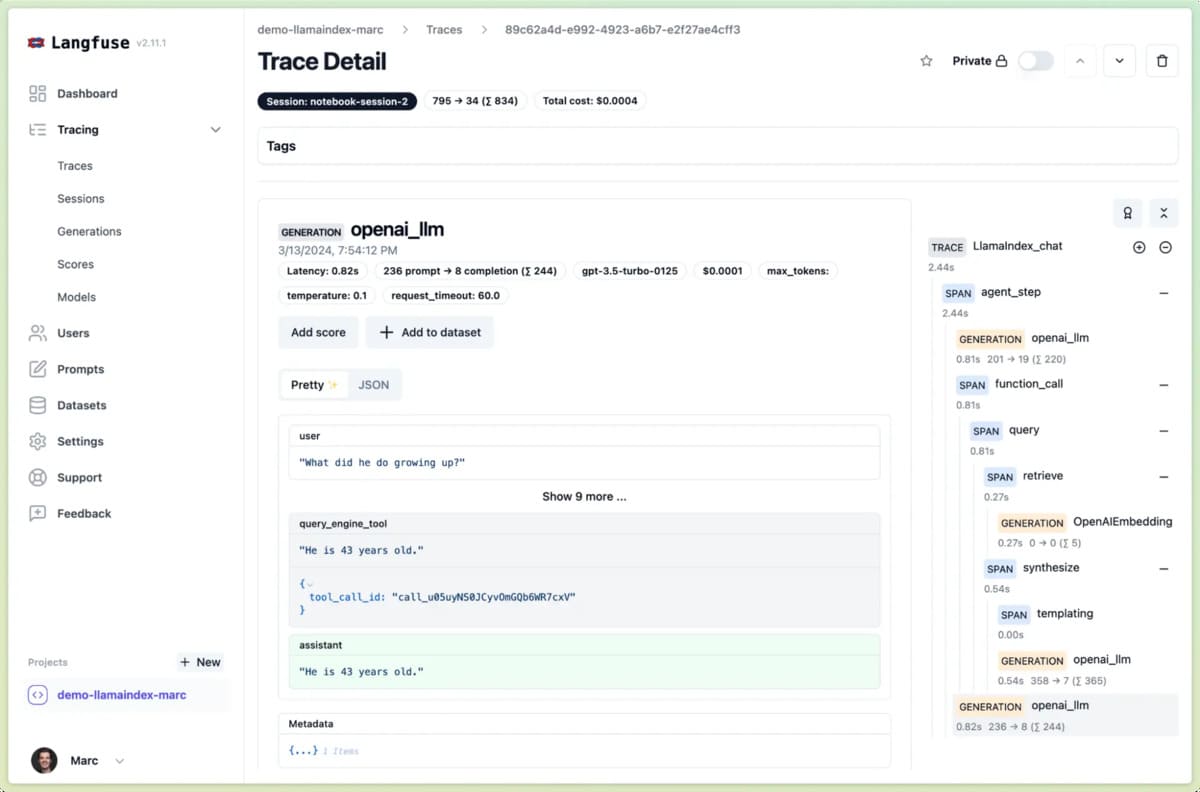

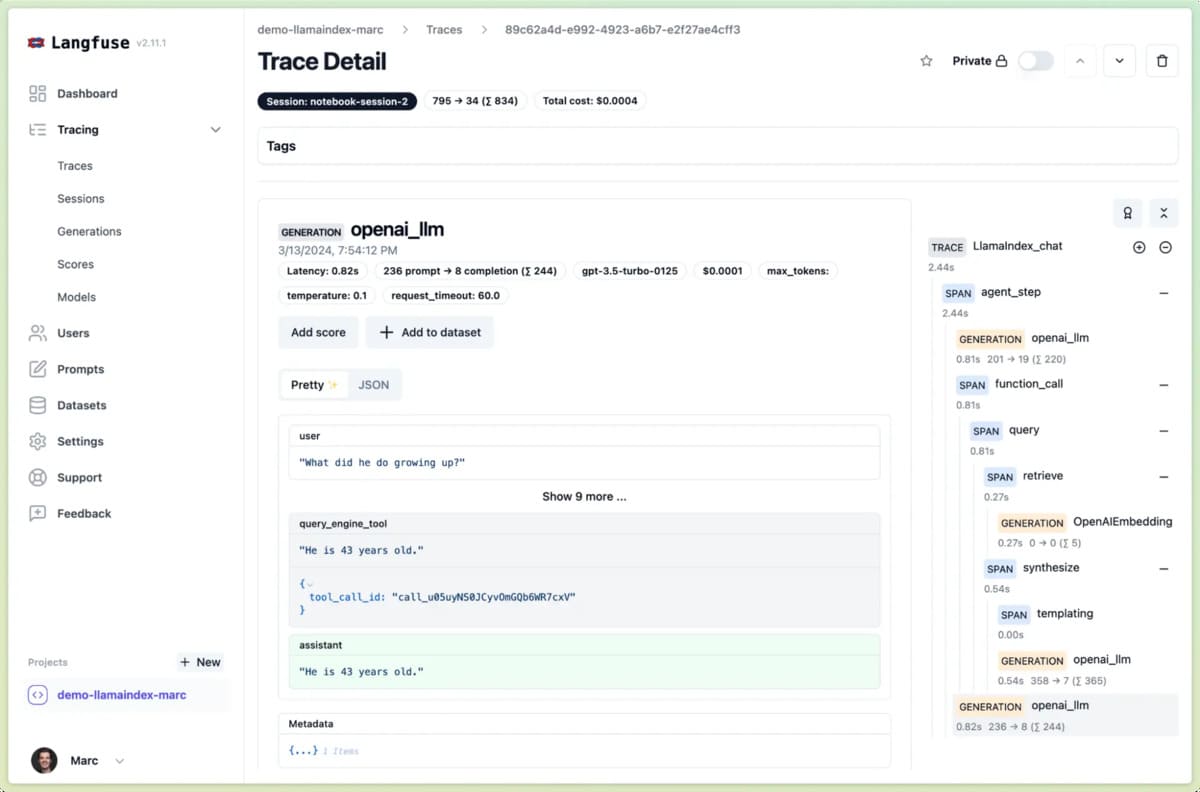

4. Langfuse

Credits: Langfuse

Langfuse is an open-source observability and evaluation platform purpose-built for LLM applications. Originally focused on helping developers monitor, trace, and evaluate large language model outputs, Langfuse has recently begun expanding into more end-to-end capabilities. However, its core strength remains in providing detailed visibility into LLM behavior, making it a top contender among teams that prioritize monitoring, performance tracking, and continuous evaluation.

Here are some of Langfuse's key features:

Real-Time Tracing and Debugging: Langfuse offers detailed execution traces that allow developers to inspect each step of an LLM interaction, including inputs, outputs, tool calls, and metadata. This tracing capability makes it easier to understand model behavior and debug unexpected results.

Evaluation and Feedback Collection: The platform supports structured evaluations through test datasets, programmatic scoring, and human feedback. This helps teams assess how models are performing over time and validate improvements or changes across different versions.

Custom Metadata, Metrics, and Tagging: Langfuse enables teams to track custom metrics and tag sessions for deeper analytics. This flexibility supports nuanced performance monitoring across different user segments or application flows.

Hosted and Self-Hosted Options: Langfuse can be used as a hosted service or deployed in a self-hosted environment, giving teams control over data and infrastructure based on their privacy and security needs.

Why Choose Langfuse?

Langfuse is an excellent choice for teams that want deep visibility into their LLM-powered apps. Its robust observability features and flexible deployment options make it particularly appealing for developers working in production environments who need to monitor performance closely. That said, for teams looking for a more holistic platform to handle orchestration, prompt iteration, or AI agent development workflows end-to-end, Langfuse may need to be supplemented with additional tools.

5. Langbase

Credits: Langbase

Langbase is a serverless platform designed for developers building intelligent AI agents with long-term memory, semantic retrieval, and advanced logic handling. While it began as a RAG-focused tool, Langbase has evolved into a broader infrastructure platform for building and scaling AI agents via APIs without requiring teams to manage vector storage, servers, or orchestration layers themselves.

With a sharp focus on developer experience and performance, Langbase enables fast iteration and high scalability for teams who want to stay close to the metal when building AI applications.

Key features:

Serverless Agent Infrastructure: Langbase offers a serverless API for deploying and scaling AI agents. Developers define the agent logic while Langbase handles the execution, scaling, and infrastructure, making it easy to move from prototype to production without provisioning servers or writing deployment scripts.

Memory Agents: At the core of Langbase are memory agents, AI agents capable of retaining context and knowledge over time. These agents are automatically connected to a long-term memory layer, eliminating the need to manually configure vector stores or context windows.

Support for Multiple LLM Providers: Langbase integrates with a wide range of LLM providers and models. This gives teams the flexibility to route requests to different models based on performance, cost, or task-specific behavior.

Semantic Retrieval & Intelligent RAG: Langbase offers strong RAG capabilities, with built-in reranking and semantic search. Developers can connect agents to custom knowledge bases without managing document pipelines or embedding infrastructure manually.

Advanced Agentic Routing and Re-Ranking: Langbase provides intelligent routing for agent queries, allowing for more accurate and relevant outputs. The platform includes reranking features to ensure that retrieved information is optimized before reaching the LLM.
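
The retrieve-then-rerank pattern described above can be sketched in a few lines of Python. This is a toy illustration, not Langbase's API. Bag-of-words cosine similarity stands in for embedding-based semantic search, query-term coverage stands in for a learned reranker, and the documents and function names are made up for the example.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base.
DOCS = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
    "To request a refund, contact support with your order number.",
    "Shipping takes three to seven business days.",
]


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list, k: int = 2) -> list:
    """First stage: take the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def rerank(query: str, candidates: list) -> list:
    """Second stage: reorder candidates by the share of distinct query terms they cover."""
    q_terms = set(tokenize(query))
    if not q_terms:
        return list(candidates)
    return sorted(
        candidates,
        key=lambda d: len(q_terms & set(tokenize(d))) / len(q_terms),
        reverse=True,
    )


results = rerank("how do I request a refund", retrieve("how do I request a refund", DOCS))
print(results[0])
```

Splitting retrieval into a cheap first stage and a more precise second stage keeps the expensive scoring limited to a handful of candidates, which is the usual motivation for reranking.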

Why Choose Langbase?

Langbase is a strong option for developer-centric teams that want maximum control and efficiency when building AI agents. Its serverless architecture and memory-driven agents make it well-suited for technical users who prioritize performance and scalability. However, the platform’s minimal UI and API-first design may limit collaboration with product managers, QA, or domain experts. Cross-functional teams looking for a more collaborative, end-to-end development environment may need to supplement Langbase with other tools to round out the broader GenAI lifecycle.

Humanloop Alternatives: Key Takeaways

As the sun sets on Humanloop in September 2025, many teams are taking the opportunity to reassess their LLM tooling needs, and for good reason. Whether you're optimizing prompts, scaling agent workflows, or enhancing observability, there’s no one-size-fits-all solution. The right platform depends on your team's technical maturity, collaboration needs, and production goals.

If your focus is developer-centric observability, platforms like LangSmith, Langfuse, and HoneyHive offer robust tools for tracing, evaluation, and debugging LLM workflows. Langbase caters to more technical teams seeking precise control over agent memory and infrastructure via streamlined APIs.

But if your team needs an end-to-end platform that brings together experimentation, orchestration, observability, RAG, and cross-functional collaboration in one place, Orq.ai is built for you.

Book a demo with our team or create a free account to explore how Orq.ai can help you take your GenAI systems from prototype to production and beyond.

Whether you're building AI-powered agents, experimenting with LLM apps, or scaling your AI infrastructure, operational tooling is no longer optional: it’s foundational. As teams move from prototypes to production, the need for robust systems to monitor, evaluate, and iterate on AI workflows has never been greater.

Humanloop has emerged in recent years as a go-to solution for managing LLM workflows, prompt tuning, and evaluation. But with Humanloop officially sunsetting its platform in September 2025, many teams are now evaluating alternatives that can meet their evolving needs in AI agent and application development.

In this blog post, we explore five leading Humanloop alternatives to help your team find the right tool for building, scaling, and evaluating AI systems.

Top 5 Humanloop Alternatives

1. Orq.ai

Evaluators & Guardrails Configuration in Orq.ai

Orq.ai is a Generative AI Collaboration Platform where software teams operate agentic systems at scale. Launched in early 2024, Orq.ai helps AI teams deliver LLM-based applications by filling the gaps traditional DevOps tools weren’t built to handle, namely the need for cross-collaboration between engineers, product teams, business experts.

In a nutshell, Orq.ai enables teams to experiment with GenAI use cases, deploy them to production, and monitor performance, all in one platform. Whether you're building AI copilots, multi-step workflows, or domain-specific agents, Orq.ai enables technical and non-technical contributors to work together seamlessly across the full AI application development lifecycle.

Here's an overview of our platform's core capabilities:

Prompt Engineering & Experimentation: Compare LLM and prompt configurations at scale using our library of evaluators. The platform also supports testing RAG pipelines and running offline evaluations to fine-tune performance before deploying to production.

Serverless Orchestration: With Orq.ai, you can run agentic workflows serverlessly without deploying your backend infrastructure. The platform supports version-controlled templates, logic branches, and parameterized flows, while also offering hosted runtimes that enable built-in routing, error handling, and fallback logic.

Observability & Monitoring: Orq.ai delivers full tracing, session replays, and anomaly detection so teams can gain real visibility into how their systems perform. We also allow you to track usage, cost, latency, and model behavior over time while providing built-in regression testing and debugging tools to help teams troubleshoot issues efficiently.

AI Gateway: You can connect to multiple LLM providers through a single, unified API, making it easy to centralize your model access. Orq.ai also supports provider routing, caching, and cost management, and includes features for data residency, audit logging, and role-based access control.

RAG-as-a-Service: Orq.ai offers RAG-as-a-Service pipelines out of the box. Teams can upload documents with minimal setup, use built-in embedding models, and manage their knowledge bases directly through the API.

Why Choose Orq.ai?

Orq.ai is a solid choice for teams looking to scale AI agent development beyond prototypes. Our end-to-end platform supports everything from experimentation to production monitoring, all while promoting cross-functional collaboration.

Book a demo with our team or create a free account to explore our platform today.

Langsmith

Credits: Langsmith

LangSmith is an observability and evaluation platform built by the creators of LangChain, designed to help developers debug, test, and monitor LLM-powered applications. Tailored specifically for LangChain-based projects, LangSmith makes it easier for teams to track and refine their agents, chains, and prompts throughout the development lifecycle. For teams already committed to the LangChain ecosystem, LangSmith is a natural fit that enhances transparency and control over LLM behavior.

Here's a quick look at Langsmith's main platform capabilities:

Integrated Tracing for LangChain Workflows: LangSmith provides detailed tracing capabilities for LangChain agents and chains, making it easy to visualize and debug multi-step workflows. Developers can inspect each step in the execution pipeline, including inputs, outputs, and intermediate tool calls.

Prompt Versioning and Testing: LangSmith enables prompt versioning and comparison, allowing teams to track changes and evaluate how different prompt iterations affect model outputs. This feature helps with systematic prompt tuning and model behavior analysis.

Evaluation and Feedback Tools: The platform supports structured and unstructured evaluations, including test dataset runs and human feedback collection. This makes it easier to assess performance over time and detect regressions as prompts or chains evolve.

Production Monitoring and Deployment Readiness: LangSmith supports production-grade monitoring with built-in visibility into application health, including response times, performance metrics, and user interactions. This makes it easier for teams to confidently deploy and operate LLM applications at scale.

Metadata Tagging and Custom Metrics: LangSmith allows developers to tag runs with metadata and define custom metrics, giving teams a flexible way to organize and analyze their data across experiments.

API Access and Hosted Platform: LangSmith offers a hosted platform with programmatic access to runs, traces, and evaluation data via API. This enables teams to integrate LangSmith insights into their broader development and monitoring workflows.

Why Choose LangSmith?

LangSmith is a logical choice for teams deeply invested in the LangChain framework and looking for robust tools to monitor and evaluate LLM workflows. Its native integration with LangChain makes it easy to instrument chains and agents without additional overhead. However, for teams building outside the LangChain ecosystem or seeking a broader platform for workflow orchestration, deployment, or RAG capabilities, LangSmith may feel limited in scope. It excels at observability and evaluation but is not intended as a full-stack solution for AI agent development or deployment at scale.

3. Honeyhive

Credits: Honeyhive

Honeyhive is an observability and evaluation platform built to support cross-functional teams building and scaling AI agents and LLM applications. It provides a unified workflow for constructing, testing, debugging, and monitoring Generative AI systems, making it a strong contender in the emerging LLMOps space.

Key features include:

Experimentation and benchmarking: Honeyhive enables you to run structured experiments with custom benchmarks, automating evaluations across prompts, models, and entire agent pipelines. You can track regressions, assign human scores, curate golden datasets, and integrate these checks into CI workflows.

Distributed Tracing & Session Replay: With its OpenTelemetry-based tracer, Honeyhive provides in-depth visibility into application execution. You can inspect distributed traces, custom spans, and session replays to pinpoint failures and performance bottlenecks in multi-step LLM pipelines.

Monitoring, Alerting & Analytics: Honeyhive supports asynchronous logging, custom metrics, and live alerts for production environments. Teams can build custom dashboards, segment data using metadata, and monitor RAG and agent pipelines in real time to catch anomalies and user feedback trends.

Built-In Evaluators & Custom Metrics: The platform provides a library of evaluators, including code-based, LLM-based, and human scoring tools. You can also define custom metrics and embed them directly into tracing spans or evaluation workflows.

Why Choose HoneyHive?

Honeyhive is ideal for teams that require a robust, developer-grade LLMOps stack. It goes far beyond simple prompt testing, offering enterprise-grade observability, automated testing, live monitoring, and feedback annotation. That said, while the platform offers powerful tooling, teams might still need to pair it with orchestration or RAG-specific platforms to handle end-to-end AI agent development workflows.

4. Langfuse

Credits: Langfuse

Langfuse is an open-source observability and evaluation platform purpose-built for LLM applications. Originally focused on helping developers monitor, trace, and evaluate large language model outputs, Langfuse has recently begun expanding into more end-to-end capabilities. However, its core strength remains in providing detailed visibility into LLM behavior, making it a top contender among teams that prioritize monitoring, performance tracking, and continuous evaluation.

Here are some of Langfuse's key features:

Real-Time Tracing and Debugging: Langfuse offers detailed execution traces that allow developers to inspect each step of an LLM interaction, including inputs, outputs, tool calls, and metadata. This tracing capability makes it easier to understand model behavior and debug unexpected results.

Evaluation and Feedback Collection: The platform supports structured evaluations through test datasets, programmatic scoring, and human feedback. This helps teams assess how models are performing over time and validate improvements or changes across different versions.

Custom Metadata, Metrics, and Tagging: Langfuse enables teams to track custom metrics and tag sessions for deeper analytics. This flexibility supports nuanced performance monitoring across different user segments or application flows.

Hosted and Self-Hosted Options: Langfuse can be used as a hosted service or deployed in a self-hosted environment, giving teams control over data and infrastructure based on their privacy and security needs.

Why Choose Langfuse?

Langfuse is an excellent choice for teams that want deep visibility into their LLM-powered apps. Its robust observability features and flexible deployment options make it particularly appealing for developers working in production environments who need to monitor performance closely. That said, for teams looking for a more holistic platform to handle orchestration, prompt iteration, or AI agent development workflows end-to-end, Langfuse may need to be supplemented with additional tools.

Langbase

Credits: Langbase

Langbase is a serverless platform designed for developers building intelligent AI agents with long-term memory, semantic retrieval, and advanced logic handling. While it began as a RAG-focused tool, Langbase has evolved into a broader infrastructure platform for building and scaling AI agents via APIs without requiring teams to manage vector storage, servers, or orchestration layers themselves.

With a sharp focus on developer experience and performance, Langbase enables fast iteration and high scalability for teams who want to stay close to the metal when building AI application development workflows.

Key features:

Serverless Agent Infrastructure: Langbase offers a serverless API for deploying and scaling AI agents. Developers define the agent logic while Langbase handles the execution, scaling, and infrastructure, making it easy to move from prototype to production without provisioning servers or writing deployment scripts.

Memory Agents: At the core of Langbase are memory agents, AI agents capable of retaining context and knowledge over time. These agents are automatically connected to a long-term memory layer, eliminating the need to manually configure vector stores or context windows.

Support for Multiple LLM Providers: Langbase integrates with a wide range of LLM providers and models. This gives teams the flexibility to route requests to different models based on performance, cost, or task-specific behavior.

Semantic Retrieval & Intelligent RAG: Langbase offers strong RAG capabilities, with built-in reranking and semantic search. Developers can connect agents to custom knowledge bases without managing document pipelines or embedding infrastructure manually.

Advanced Agentic Routing and Re-Ranking: Langbase provides intelligent routing for agent queries, allowing for more accurate and relevant outputs. The platform includes reranking features to ensure that retrieved information is optimized before reaching the LLM.

Why Choose Langbase?

Langbase is a strong option for developer-centric teams that want maximum control and efficiency when building AI agents. Its serverless architecture and memory-driven agents make it well-suited for technical users who prioritize performance and scalability. However, the platform’s minimal UI and API-first design may limit collaboration with product managers, QA, or domain experts. Cross-functional teams looking for a more collaborative, end-to-end development environment may need to supplement Langbase with other tools to round out the broader GenAI lifecycle.

Humanloop Alternatives: Key Takeaways

As the sun sets on Humanloop in September 2025, many teams are taking the opportunity to reassess their LLM tooling needs, and for good reason. Whether you're optimizing prompts, scaling agent workflows, or enhancing observability, there’s no one-size-fits-all solution. The right platform depends on your team's technical maturity, collaboration needs, and production goals.

If your focus is developer-centric observability, platforms like Langsmith, Langfuse, and Honeyhive offer robust tools for tracing, evaluation, and debugging LLM workflows. Langbase caters to more technical teams seeking precise control over agent memory and infrastructure via streamlined APIs.

But if your team needs an end-to-end platform that brings together experimentation, orchestration, observability, RAG, and cross-functional collaboration in one place, Orq.ai is built for you.

Book a demo with our team or create a free account to explore how Orq.ai can help you take your GenAI systems from prototype to production and beyond.

Whether you're building AI-powered agents, experimenting with LLM apps, or scaling your AI infrastructure, operational tooling is no longer optional: it’s foundational. As teams move from prototypes to production, the need for robust systems to monitor, evaluate, and iterate on AI workflows has never been greater.

Humanloop has emerged in recent years as a go-to solution for managing LLM workflows, prompt tuning, and evaluation. But with Humanloop officially sunsetting its platform in September 2025, many teams are now evaluating alternatives that can meet their evolving needs in AI agent and application development.

In this blog post, we explore five leading Humanloop alternatives to help your team find the right tool for building, scaling, and evaluating AI systems.

Top 5 Humanloop Alternatives

1. Orq.ai

Evaluators & Guardrails Configuration in Orq.ai

Orq.ai is a Generative AI Collaboration Platform where software teams operate agentic systems at scale. Launched in early 2024, Orq.ai helps AI teams deliver LLM-based applications by filling the gaps traditional DevOps tools weren’t built to handle, namely the need for cross-collaboration between engineers, product teams, business experts.

In a nutshell, Orq.ai enables teams to experiment with GenAI use cases, deploy them to production, and monitor performance, all in one platform. Whether you're building AI copilots, multi-step workflows, or domain-specific agents, Orq.ai enables technical and non-technical contributors to work together seamlessly across the full AI application development lifecycle.

Here's an overview of our platform's core capabilities:

Prompt Engineering & Experimentation: Compare LLM and prompt configurations at scale using our library of evaluators. The platform also supports testing RAG pipelines and running offline evaluations to fine-tune performance before deploying to production.

Serverless Orchestration: With Orq.ai, you can run agentic workflows serverlessly without deploying your backend infrastructure. The platform supports version-controlled templates, logic branches, and parameterized flows, while also offering hosted runtimes that enable built-in routing, error handling, and fallback logic.

Observability & Monitoring: Orq.ai delivers full tracing, session replays, and anomaly detection so teams can gain real visibility into how their systems perform. We also allow you to track usage, cost, latency, and model behavior over time while providing built-in regression testing and debugging tools to help teams troubleshoot issues efficiently.

AI Gateway: You can connect to multiple LLM providers through a single, unified API, making it easy to centralize your model access. Orq.ai also supports provider routing, caching, and cost management, and includes features for data residency, audit logging, and role-based access control.

RAG-as-a-Service: Orq.ai offers RAG-as-a-Service pipelines out of the box. Teams can upload documents with minimal setup, use built-in embedding models, and manage their knowledge bases directly through the API.

Why Choose Orq.ai?

Orq.ai is a solid choice for teams looking to scale AI agent development beyond prototypes. Our end-to-end platform supports everything from experimentation to production monitoring, all while promoting cross-functional collaboration.

Book a demo with our team or create a free account to explore our platform today.

2. LangSmith

Credits: LangSmith

LangSmith is an observability and evaluation platform built by the creators of LangChain, designed to help developers debug, test, and monitor LLM-powered applications. Tailored specifically for LangChain-based projects, LangSmith makes it easier for teams to track and refine their agents, chains, and prompts throughout the development lifecycle. For teams already committed to the LangChain ecosystem, LangSmith is a natural fit that enhances transparency and control over LLM behavior.

Here's a quick look at LangSmith's main platform capabilities:

Integrated Tracing for LangChain Workflows: LangSmith provides detailed tracing capabilities for LangChain agents and chains, making it easy to visualize and debug multi-step workflows. Developers can inspect each step in the execution pipeline, including inputs, outputs, and intermediate tool calls.

Prompt Versioning and Testing: LangSmith enables prompt versioning and comparison, allowing teams to track changes and evaluate how different prompt iterations affect model outputs. This feature helps with systematic prompt tuning and model behavior analysis.

Evaluation and Feedback Tools: The platform supports structured and unstructured evaluations, including test dataset runs and human feedback collection. This makes it easier to assess performance over time and detect regressions as prompts or chains evolve.

Production Monitoring and Deployment Readiness: LangSmith supports production-grade monitoring with built-in visibility into application health, including response times, performance metrics, and user interactions. This makes it easier for teams to confidently deploy and operate LLM applications at scale.

Metadata Tagging and Custom Metrics: LangSmith allows developers to tag runs with metadata and define custom metrics, giving teams a flexible way to organize and analyze their data across experiments.

API Access and Hosted Platform: LangSmith offers a hosted platform with programmatic access to runs, traces, and evaluation data via API. This enables teams to integrate LangSmith insights into their broader development and monitoring workflows.
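
For readers new to step-level tracing, the idea can be sketched in a few lines of plain Python. This is not the LangSmith SDK: the `traced` decorator, the in-memory `TRACE` list, and the toy `retrieve` and `generate` steps are all hypothetical, chosen only to show what "inspecting inputs, outputs, and latency per step" means in practice.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# In-memory stand-in for a tracing backend (hypothetical).
TRACE: List[Dict[str, Any]] = []


def traced(step_name: str) -> Callable:
    """Decorator that records inputs, output, and latency of each call."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            output = fn(*args, **kwargs)
            # The span is appended once the step has finished.
            TRACE.append({
                "step": step_name,
                "inputs": {"args": args, "kwargs": kwargs},
                "output": output,
                "latency_s": time.perf_counter() - start,
            })
            return output
        return wrapper
    return decorator


@traced("retrieve")
def retrieve(query: str) -> List[str]:
    """Toy retrieval step standing in for a vector search."""
    return [f"doc about {query}"]


@traced("generate")
def generate(query: str, docs: List[str]) -> str:
    """Toy generation step standing in for an LLM call."""
    return f"answer to {query!r} using {len(docs)} doc(s)"


def pipeline(query: str) -> str:
    return generate(query, retrieve(query))


if __name__ == "__main__":
    pipeline("llmops")
    for span in TRACE:
        print(span["step"], span["output"], f"{span['latency_s']:.6f}s")
```

A hosted observability platform does the same kind of recording, but persists the spans, links them into a tree per request, and makes them searchable, which is what turns raw logs into something a team can debug with.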

Why Choose LangSmith?

LangSmith is a logical choice for teams deeply invested in the LangChain framework and looking for robust tools to monitor and evaluate LLM workflows. Its native integration with LangChain makes it easy to instrument chains and agents without additional overhead. However, for teams building outside the LangChain ecosystem or seeking a broader platform for workflow orchestration, deployment, or RAG capabilities, LangSmith may feel limited in scope. It excels at observability and evaluation but is not intended as a full-stack solution for AI agent development or deployment at scale.

3. HoneyHive

Credits: HoneyHive

HoneyHive is an observability and evaluation platform built to support cross-functional teams building and scaling AI agents and LLM applications. It provides a unified workflow for constructing, testing, debugging, and monitoring Generative AI systems, making it a strong contender in the emerging LLMOps space.

Key features include:

Experimentation and Benchmarking: HoneyHive enables you to run structured experiments with custom benchmarks, automating evaluations across prompts, models, and entire agent pipelines. You can track regressions, assign human scores, curate golden datasets, and integrate these checks into CI workflows.

Distributed Tracing & Session Replay: With its OpenTelemetry-based tracer, HoneyHive provides in-depth visibility into application execution. You can inspect distributed traces, custom spans, and session replays to pinpoint failures and performance bottlenecks in multi-step LLM pipelines.

Monitoring, Alerting & Analytics: HoneyHive supports asynchronous logging, custom metrics, and live alerts for production environments. Teams can build custom dashboards, segment data using metadata, and monitor RAG and agent pipelines in real time to catch anomalies and user feedback trends.

Built-In Evaluators & Custom Metrics: The platform provides a library of evaluators, including code-based, LLM-based, and human scoring tools. You can also define custom metrics and embed them directly into tracing spans or evaluation workflows.
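
As a rough illustration of how a code-based evaluator, a golden dataset, and a CI regression check fit together, consider the sketch below. It does not use HoneyHive's SDK: the dataset, the `exact_match` evaluator, the two toy models, and the `passes_gate` helper are hypothetical and exist only to show the pattern.

```python
from typing import Callable, Dict, List

# Hypothetical golden dataset: inputs paired with expected answers.
GOLDEN: List[Dict[str, str]] = [
    {"input": "2+2", "expected": "4"},
    {"input": "capital of France", "expected": "Paris"},
    {"input": "3*3", "expected": "9"},
]


def exact_match(output: str, expected: str) -> float:
    """Code-based evaluator: 1.0 if normalized strings match, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_eval(model: Callable[[str], str], dataset: List[Dict[str, str]]) -> float:
    """Average evaluator score of `model` over the dataset."""
    scores = [exact_match(model(row["input"]), row["expected"]) for row in dataset]
    return sum(scores) / len(scores)


def passes_gate(candidate: float, baseline: float, tolerance: float = 0.0) -> bool:
    """True if the candidate has not regressed beyond the tolerance."""
    return candidate >= baseline - tolerance


def baseline_model(text: str) -> str:
    """Toy stand-in for the currently deployed prompt or model."""
    return {"2+2": "4", "capital of France": "paris"}.get(text, "")


def candidate_model(text: str) -> str:
    """Toy stand-in for the new prompt or model under test."""
    answers = {"2+2": "4", "capital of France": "Paris", "3*3": "9"}
    return answers.get(text, "")


if __name__ == "__main__":
    baseline = run_eval(baseline_model, GOLDEN)
    candidate = run_eval(candidate_model, GOLDEN)
    print(f"baseline={baseline:.2f} candidate={candidate:.2f}")
    # In CI, a failed gate would fail the build.
    assert passes_gate(candidate, baseline), "candidate regressed on golden set"
```

The same structure extends to LLM-based or human scoring by swapping out `exact_match`; the gate itself stays a simple comparison against the last accepted score.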

Why Choose HoneyHive?

HoneyHive is ideal for teams that require a robust, developer-grade LLMOps stack. It goes far beyond simple prompt testing, offering enterprise-grade observability, automated testing, live monitoring, and feedback annotation. That said, while the platform offers powerful tooling, teams might still need to pair it with orchestration or RAG-specific platforms to handle end-to-end AI agent development workflows.

4. Langfuse

Credits: Langfuse

Langfuse is an open-source observability and evaluation platform purpose-built for LLM applications. Originally focused on helping developers monitor, trace, and evaluate large language model outputs, Langfuse has recently begun expanding into more end-to-end capabilities. However, its core strength remains in providing detailed visibility into LLM behavior, making it a top contender among teams that prioritize monitoring, performance tracking, and continuous evaluation.

Here are some of Langfuse's key features:

Real-Time Tracing and Debugging: Langfuse offers detailed execution traces that allow developers to inspect each step of an LLM interaction, including inputs, outputs, tool calls, and metadata. This tracing capability makes it easier to understand model behavior and debug unexpected results.

Evaluation and Feedback Collection: The platform supports structured evaluations through test datasets, programmatic scoring, and human feedback. This helps teams assess how models are performing over time and validate improvements or changes across different versions.

Custom Metadata, Metrics, and Tagging: Langfuse enables teams to track custom metrics and tag sessions for deeper analytics. This flexibility supports nuanced performance monitoring across different user segments or application flows.

Hosted and Self-Hosted Options: Langfuse can be used as a hosted service or deployed in a self-hosted environment, giving teams control over data and infrastructure based on their privacy and security needs.

Why Choose Langfuse?

Langfuse is an excellent choice for teams that want deep visibility into their LLM-powered apps. Its robust observability features and flexible deployment options make it particularly appealing for developers working in production environments who need to monitor performance closely. That said, for teams looking for a more holistic platform to handle orchestration, prompt iteration, or AI agent development workflows end-to-end, Langfuse may need to be supplemented with additional tools.

5. Langbase

Credits: Langbase

Langbase is a serverless platform designed for developers building intelligent AI agents with long-term memory, semantic retrieval, and advanced logic handling. While it began as a RAG-focused tool, Langbase has evolved into a broader infrastructure platform for building and scaling AI agents via APIs without requiring teams to manage vector storage, servers, or orchestration layers themselves.

With a sharp focus on developer experience and performance, Langbase enables fast iteration and high scalability for teams who want to stay close to the metal when building AI applications.

Key features:

Serverless Agent Infrastructure: Langbase offers a serverless API for deploying and scaling AI agents. Developers define the agent logic while Langbase handles the execution, scaling, and infrastructure, making it easy to move from prototype to production without provisioning servers or writing deployment scripts.

Memory Agents: At the core of Langbase are memory agents, AI agents capable of retaining context and knowledge over time. These agents are automatically connected to a long-term memory layer, eliminating the need to manually configure vector stores or context windows.

Support for Multiple LLM Providers: Langbase integrates with a wide range of LLM providers and models. This gives teams the flexibility to route requests to different models based on performance, cost, or task-specific behavior.

Semantic Retrieval & Intelligent RAG: Langbase offers strong RAG capabilities, with built-in reranking and semantic search. Developers can connect agents to custom knowledge bases without managing document pipelines or embedding infrastructure manually.

Advanced Agentic Routing and Re-Ranking: Langbase provides intelligent routing for agent queries, allowing for more accurate and relevant outputs. The platform includes reranking features to ensure that retrieved information is optimized before reaching the LLM.

Why Choose Langbase?

Langbase is a strong option for developer-centric teams that want maximum control and efficiency when building AI agents. Its serverless architecture and memory-driven agents make it well-suited for technical users who prioritize performance and scalability. However, the platform’s minimal UI and API-first design may limit collaboration with product managers, QA, or domain experts. Cross-functional teams looking for a more collaborative, end-to-end development environment may need to supplement Langbase with other tools to round out the broader GenAI lifecycle.

Humanloop Alternatives: Key Takeaways

As the sun sets on Humanloop in September 2025, many teams are taking the opportunity to reassess their LLM tooling needs, and for good reason. Whether you're optimizing prompts, scaling agent workflows, or enhancing observability, there’s no one-size-fits-all solution. The right platform depends on your team's technical maturity, collaboration needs, and production goals.

If your focus is developer-centric observability, platforms like LangSmith, Langfuse, and HoneyHive offer robust tools for tracing, evaluation, and debugging LLM workflows. Langbase caters to more technical teams seeking precise control over agent memory and infrastructure via streamlined APIs.

But if your team needs an end-to-end platform that brings together experimentation, orchestration, observability, RAG, and cross-functional collaboration in one place, Orq.ai is built for you.

Book a demo with our team or create a free account to explore how Orq.ai can help you take your GenAI systems from prototype to production and beyond.

FAQ

What should teams look for when choosing an alternative to Humanloop?

How do effective LLMOps platforms support collaboration across different roles?

What are the key capabilities needed for prompt evaluation and experimentation?

How can teams ensure reliable AI application performance after deployment?

Is it necessary to manage infrastructure when working with LLMOps platforms?